Why 550 Requests a Month Doesn’t Work for Modern AI Coding

Cursor’s Pricing Puzzle

Cursor’s July 4 “Clarifying Our Pricing” post explains that the new Pro plan’s US$20 credit buys roughly 550 Gemini 2.5, 650 GPT-4.1, or 225 Claude Sonnet requests before metering kicks in. My reaction: that covers half a power-user day, not a month. If Cursor needed a second post to spell this out, plenty of devs are lost in the math.

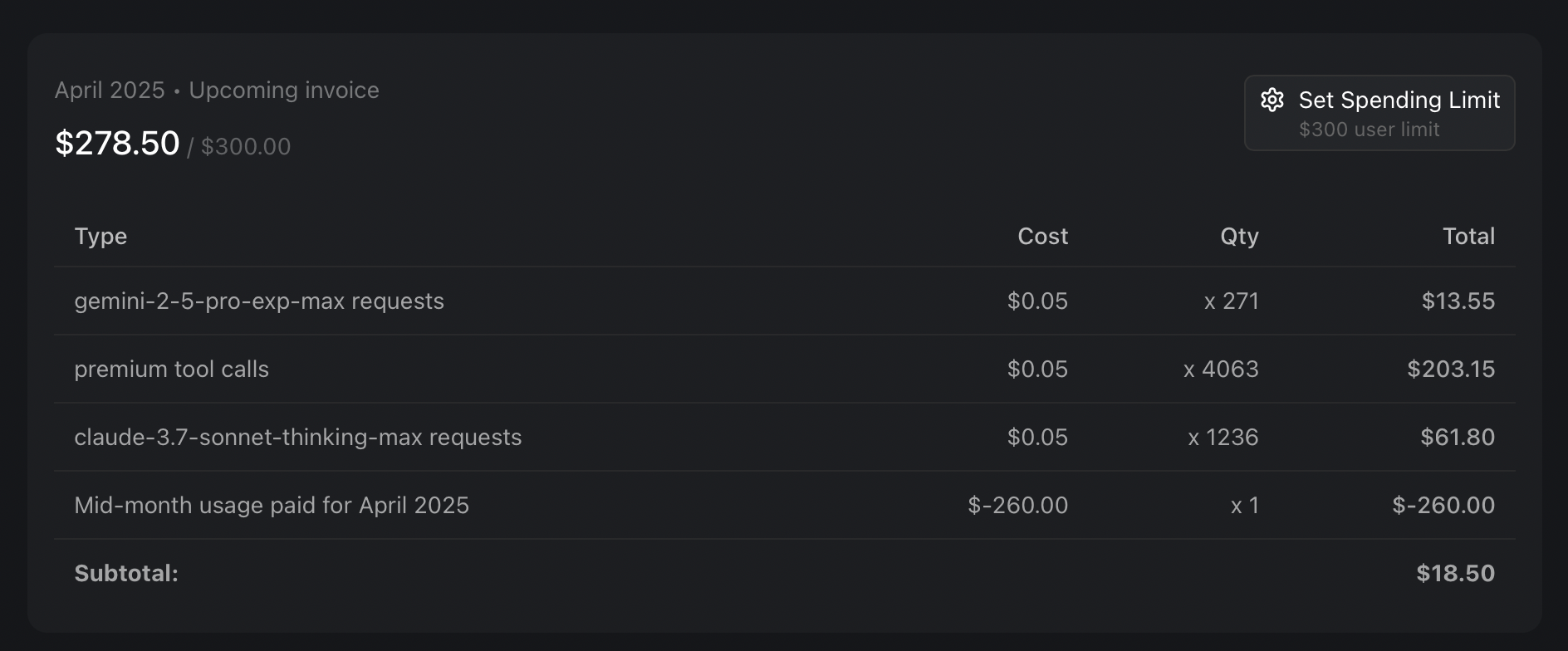
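To make the math less of a puzzle, here is a minimal sketch that turns the quoted request counts into an implied average price per request. It assumes the whole $20 credit goes to a single model; the function name is mine, not anything from Cursor.

```python
# Approximate request counts the $20 Pro credit buys, as quoted in Cursor's post.
CREDIT_USD = 20.00
REQUESTS_PER_CREDIT = {
    "Gemini 2.5": 550,
    "GPT-4.1": 650,
    "Claude Sonnet": 225,
}


def implied_cost_per_request(credit_usd: float, requests: int) -> float:
    """Average dollars per request if the credit is spent on one model only."""
    return credit_usd / requests


for model, count in REQUESTS_PER_CREDIT.items():
    cost = implied_cost_per_request(CREDIT_USD, count)
    print(f"{model}: ~${cost:.3f} per request")
```

That works out to roughly 3 to 9 cents per request depending on the model, which is the number to keep in mind once metering starts.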

My $200 Experiment Card

Every engineer at my startup gets a $200-per-month “AI card.” I burned through mine back when Cursor still counted “plain requests.”

(my Cursor usage in April)

From One-Track to Concurrency

Cursor’s single chat pane pushed me into one-task-at-a-time mode. Claude Code blew that up.

- One terminal runs an automated edit-test-debug loop; Claude iterates until I intervene.

- As many extra terminals as possible handle planning, research, or quick gh commands (“Research X, comment on this PR with feedback based on Y”).

- GitHub Actions integration: mentioning @claude from the browser or my phone spins up a worker to review a PR or sketch a fix.

With research, coding, tests, and triage running in parallel, a 550-request ceiling evaporates. Waiting on streaming tokens feels like XKCD’s classic “Compiling” strip.

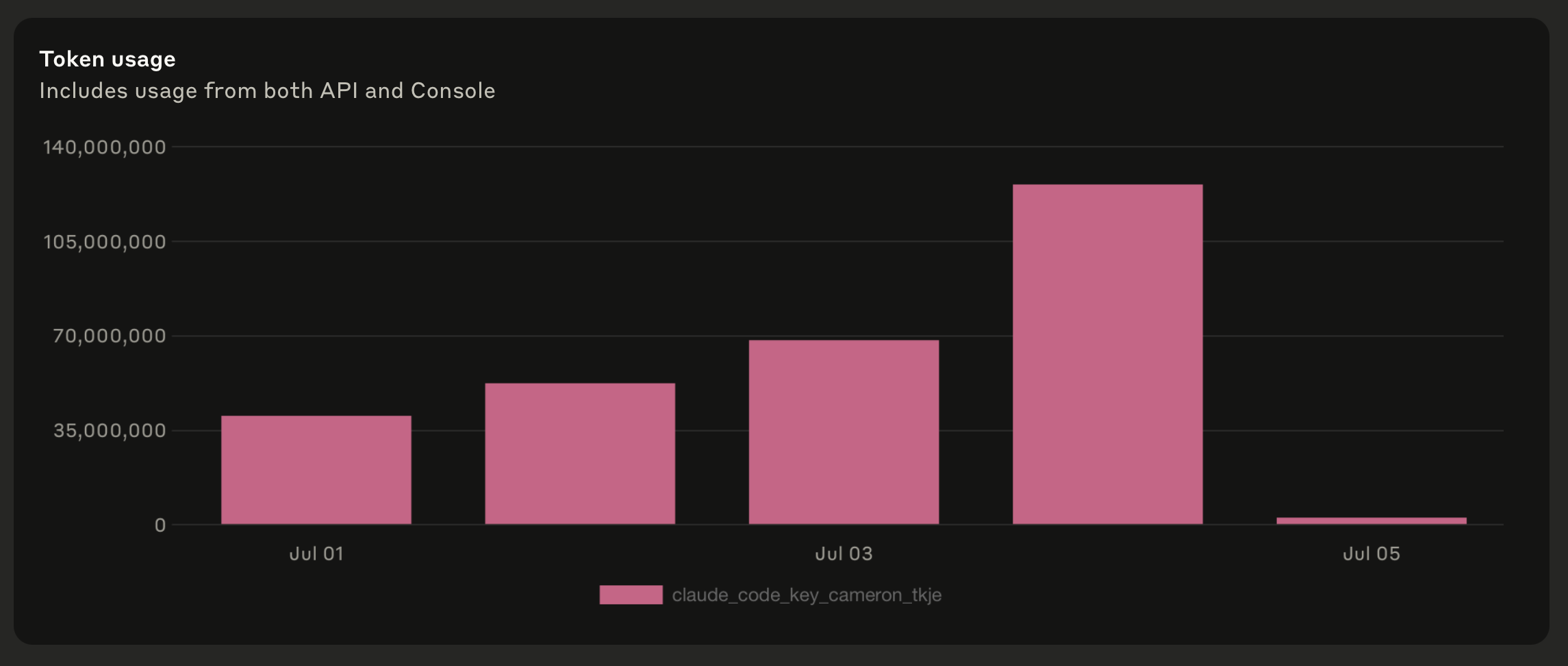

(my Claude Code token usage in July so far… 🙈)
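A back-of-the-envelope sketch of why parallel sessions eat a request cap so quickly. The 550 figure is from Cursor's post; the per-session request rate and hours per day are hypothetical placeholders, so plug in your own numbers.

```python
MONTHLY_REQUEST_CAP = 550  # Gemini figure quoted in Cursor's post


def working_days_until_cap(
    cap: int,
    sessions: int,
    requests_per_session_hour: float,
    hours_per_day: float,
) -> float:
    """Working days until `cap` requests are used up across parallel sessions."""
    requests_per_day = sessions * requests_per_session_hour * hours_per_day
    return cap / requests_per_day


# Hypothetical workload: 20 requests per hour per session, 6 focused hours a day.
for sessions in (1, 2, 4):
    days = working_days_until_cap(MONTHLY_REQUEST_CAP, sessions, 20, 6)
    print(f"{sessions} session(s): cap reached after ~{days:.1f} working days")
```

The point is the shape, not the exact values: the time to hit the cap shrinks in direct proportion to the number of sessions running at once, and an agent loop that fires requests on its own pushes the per-session rate up as well.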

How I See the Landscape

Frugal, Model-Agnostic

Cursor Pro (Auto on). Cheapest if you’re okay letting Cursor route to “whatever’s available.” I’m not. Was that model chosen because it’s best for the task — or best for Cursor’s margin? Mystery-meat answers break trust.

Frugal, Bleeding-Edge

Google’s Gemini freebies. The IDE plug-in alone grants 6,000 code completions plus 240 chat turns every day (≈180,000 completions per month). The new Gemini CLI preview adds 1,000 calls per day with a 60-per-minute burst limit. The CLI’s workflow isn’t Claude Code-grade yet, but the underlying Gemini Pro model is solid; if the tooling catches up I’ll switch in a heartbeat.
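For scale, a quick sketch that projects those daily quotas to a month and asks how many days of each free quota it takes to match the 550 requests Cursor's credit buys. It assumes a 30-day month, and it treats a completion, a chat turn, and a CLI call as comparable to a Cursor request, which is a loose comparison at best.

```python
# Daily free-tier quotas as quoted in this post.
GEMINI_FREE_DAILY = {
    "IDE code completions": 6_000,
    "IDE chat turns": 240,
    "CLI calls": 1_000,
}
DAYS_PER_MONTH = 30  # assumption: a 30-day month
CURSOR_CREDIT_REQUESTS = 550  # Gemini figure from Cursor's post


def monthly_quota(daily: int, days: int = DAYS_PER_MONTH) -> int:
    """Quota available over a month if the daily allowance resets every day."""
    return daily * days


def days_to_match(daily: int, target: int) -> float:
    """Days of free quota needed to reach `target` requests."""
    return target / daily


for name, daily in GEMINI_FREE_DAILY.items():
    print(
        f"{name}: {monthly_quota(daily):,} per month, "
        f"matches {CURSOR_CREDIT_REQUESTS} requests in "
        f"{days_to_match(daily, CURSOR_CREDIT_REQUESTS):.2f} days"
    )
```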

Spend-Happy, Model-Hopping

Cursor after the credit runs out. Perfect when I let o3 plan, Sonnet write code, and GPT-4.1 sanity-check the diff—all in one editor. Just keep an eye on the meter.

Spend-Happy, Brand-Loyal

Claude Max. Flat-fee headroom: ≈225 messages (or ~50–200 Claude Code prompts) every five hours for $100, or ≈900 messages (or ~200–800 Claude Code prompts) every five hours for $200. Finance teams love predictable bills once token invoices start spiking.
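A small sketch comparing the two tiers using only the approximate figures quoted here. The `MaxTier` class and its field names are my own illustration, not anything from Anthropic.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MaxTier:
    """Approximate per-five-hour-window limits as quoted in this post."""

    price_usd: int
    messages_per_window: int
    code_prompts_low: int
    code_prompts_high: int

    def messages_per_dollar(self) -> float:
        """Messages per window divided by the monthly price."""
        return self.messages_per_window / self.price_usd


MAX_100 = MaxTier(100, 225, 50, 200)
MAX_200 = MaxTier(200, 900, 200, 800)

price_ratio = MAX_200.price_usd / MAX_100.price_usd
headroom_ratio = MAX_200.messages_per_window / MAX_100.messages_per_window
print(f"{price_ratio:.0f}x the price buys {headroom_ratio:.0f}x the messages per window")
```

On these numbers, doubling the bill quadruples the per-window headroom, so the $200 tier is the better deal per message if you actually hit the lower limit.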

OpenAI Codex preview. Plus and Pro subscribers get $5 and $50 in API credit, respectively; codex-mini runs $1.50 per million input tokens and $6 per million output tokens. The CLI and cloud flavors haven’t hooked me yet; the duct-tape version of Claude Code in Actions feels more capable.
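To see how far those credits stretch, here is a minimal cost sketch using the codex-mini rates quoted above. The token counts per call are a hypothetical workload, not measured data.

```python
INPUT_USD_PER_MTOK = 1.50   # codex-mini input rate quoted in this post
OUTPUT_USD_PER_MTOK = 6.00  # codex-mini output rate quoted in this post


def codex_mini_cost(input_tokens: int, output_tokens: int) -> float:
    """Dollar cost of one call at the quoted per-million-token rates."""
    return (
        input_tokens * INPUT_USD_PER_MTOK + output_tokens * OUTPUT_USD_PER_MTOK
    ) / 1_000_000


def calls_per_credit(credit_usd: float, input_tokens: int, output_tokens: int) -> int:
    """Whole calls a credit covers if every call has the same token counts."""
    return int(credit_usd // codex_mini_cost(input_tokens, output_tokens))


# Hypothetical call: 20,000 tokens of context in, 2,000 tokens of code out.
for plan, credit in (("Plus", 5.0), ("Pro", 50.0)):
    calls = calls_per_credit(credit, 20_000, 2_000)
    print(f"{plan}: ${credit:.0f} credit covers about {calls} such calls")
```

Agentic loops resend context on every turn, so real input token counts tend to grow with the length of the session; treat the result as an upper bound.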

Key Takeaways

- Gemini’s free tier will siphon price-sensitive Cursor users the moment quality matches.

- Concurrency multiplies usage; 550-request caps feel prehistoric.

- Cursor Auto is cheap, but hidden model choice = distrust. “Best for you” or best for Cursor’s margin?

- Claude Max buys big headroom and a flat bill—vendor lock-in included.

- Expect to budget >$100/month if you want both model choice and parallel tasks.

- We’re in a productivity-transformation phase; hard cost caps can wait until spending hits two grand.

- Everyone (Cursor, Anthropic, Google, OpenAI) is racing toward concurrent, agent-rich workflows. Caps of a few hundred requests won’t survive that future.