Vibe Coding Reinforcement Learning: First Steps

Using LLMs to Break Down Barriers in Technical Learning

I’ve always been fascinated by reinforcement learning since the AlphaGo days. As an avid gamer, the concept of learning through repetitive play strongly resonates with me. But despite my programming experience, I always viewed reinforcement learning as “high science” – the hardest aspect of machine learning, beyond my reach.

That changed recently. With the rise of powerful language models enabling what Andrej Karpathy calls “vibe coding” - where you fully give in to the vibes and let LLMs handle the technical details - I started wondering: Could I use this approach to train my own reinforcement learning model?

Here’s the story of my “side side quest” (yes, that’s a side quest to my side projects) and what I’ve learned so far.

The Catalysts

Two recent developments sparked my curiosity:

- DeepSeek-R1: A Chinese hedge fund releasing a world-class reasoning model.

- QwQ-32B: A model almost an order of magnitude smaller than R1 but matching its capabilities.

Both had something in common: they used reinforcement learning techniques for reasoning adaptation. If smaller teams could achieve these results, could I—with LLM assistance—also explore this space?

Finding the Right Problem

The first challenge was identifying a suitable problem. I work with many LLM-powered applications, but wanted to be strategic about what to tackle.

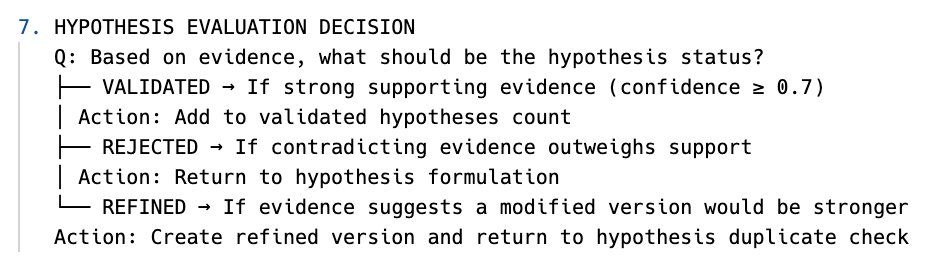

Working with Claude, I analyzed one of my agent projects, breaking it down into a detailed decision tree as shown above. This revealed a perfect candidate: the hypothesis validation component of my research system.

My current setup has:

- One model generating hypotheses

- Another gathering evidence

- A third model making a judgment: validated (with confidence ≥ 0.7), rejected (when contradicting evidence outweighs support), or refined (when evidence suggests a modified version would be stronger)

This last judgment step seemed ideal—a narrow classification problem requiring meaningful reasoning but limited enough in scope for my experiment.

The Strategy: Three-Stage Approach

I developed a straightforward plan:

- Define and generate data

- Establish benchmarks

- Improve on those benchmarks

For research, I asked Claude to help analyze papers like DeepSeek’s, distilling their key technical decisions and reasoning about how they might apply to my context. I wasn’t aiming for novel research—just applying established techniques to my specific domain.

Data Generation: Learning from the Best

Since I feel that o1 represents current state-of-the-art reasoning, I decided to use it to generate my training data. I created:

- A topic generator script in TypeScript that produced 60 topics across domains like finance, management, and risk

- A hypothesis-evidence generator that created 350 paired examples across five domains, using a curriculum approach (from easy to challenging cases)

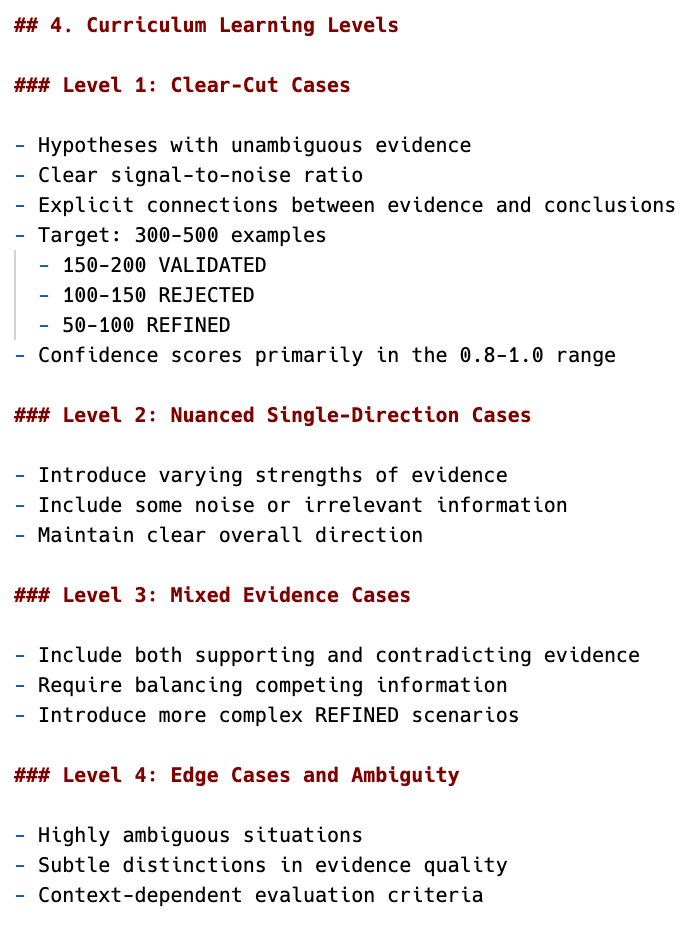

For this curriculum learning approach, I designed a progressive difficulty system as shown below:

The curriculum starts with Level 1 (clear-cut cases with unambiguous evidence) before progressing to more nuanced scenarios. This structured approach allowed me to generate training data with the right balance of validated (150-200), rejected (100-150), and refined (50-100) examples, each with appropriate confidence scores.

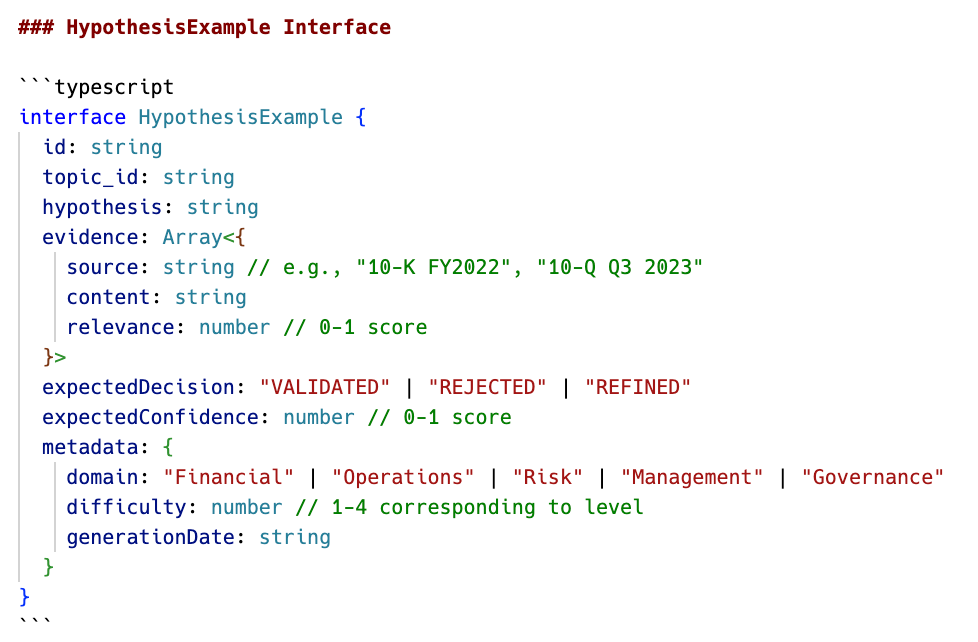

To implement this, I defined a clear TypeScript interface for each example in my dataset:

Taking the time to design this interface upfront proved to be one of the most valuable strategic decisions in the project. By defining this structure before generating any data, I was able to leverage OpenAI’s structured output capabilities in their API, essentially giving the model a precise “template” to fill out.

This approach dramatically streamlined the data generation process. Instead of getting freeform text that would need extensive parsing and cleaning, I received perfectly structured JSON objects that mapped directly to my TypeScript interface. Each example includes the hypothesis text, an array of evidence pieces (with source, content, and relevance scores), expected decision (VALIDATED/REJECTED/REFINED), confidence score, and metadata tracking the domain and difficulty level.

This is vibe coding at its best - spending your mental energy on designing the right structure, then letting the AI handle the repetitive generation work while maintaining perfect consistency. It made it possible to systematically generate, evaluate, and eventually train on these examples with minimal friction.

I also implemented a quality control step where the model itself would judge whether generated examples were good, creating a feedback loop until I had solid samples.

Interestingly, hypotheses in the risk domain kept getting rejected by the QA system. After discussing this with Claude, we concluded that risk might be inherently incompatible with “level one” difficulty’s requirements for unambiguous evidence and clear signal-to-noise ratio—a fascinating insight to shelve for later.

Benchmarking: Setting a Baseline

For benchmarks, I selected two models:

- QwQ-32B (state-of-the-art reasoning with moderate size)

- Qwen2.5-3B-Instruct (a very small model)

My hypothesis was that if I could significantly improve the smaller model’s performance, it would deliver real value through faster speed and lower costs.

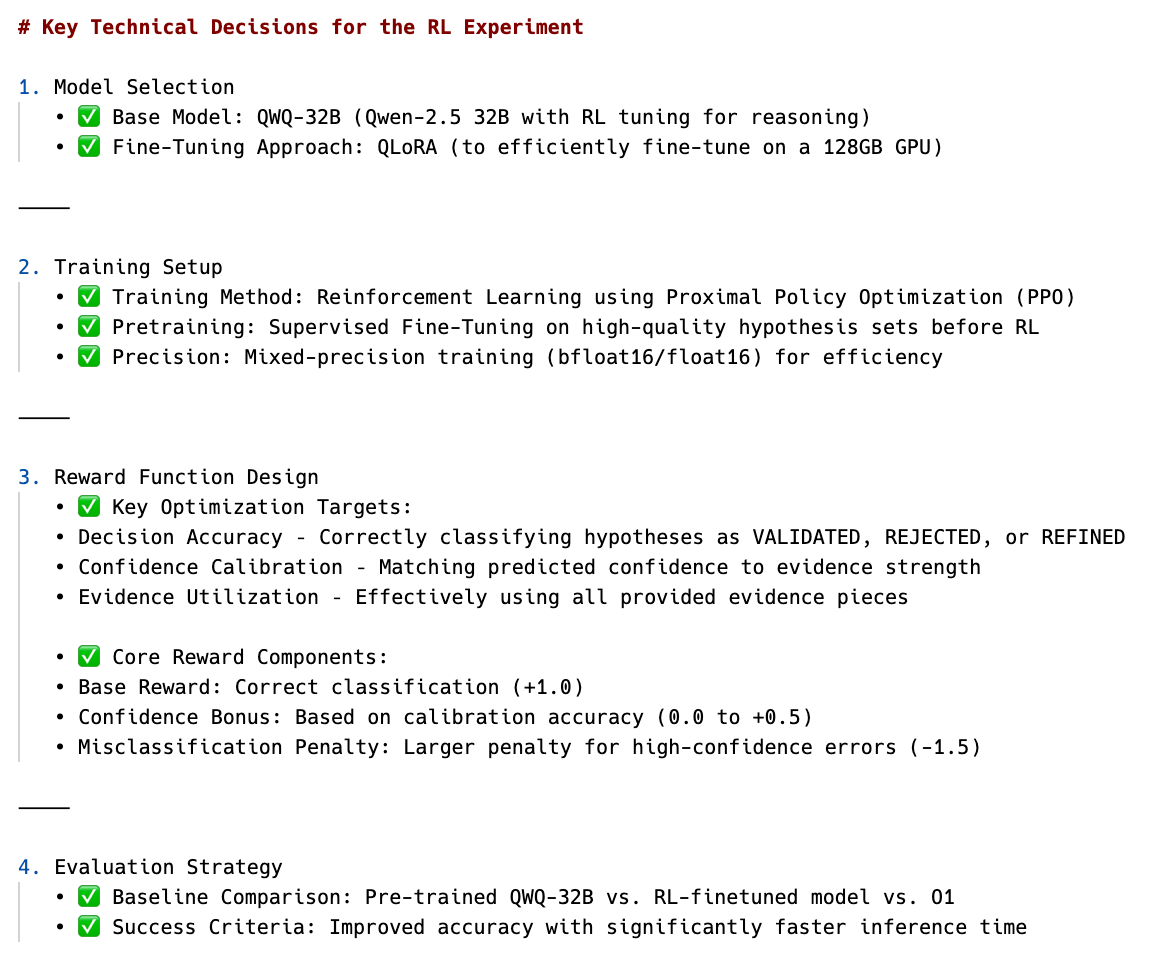

To guide this experiment, I developed a structured plan of key technical decisions:

These decisions reflect my approach - I researched state-of-the-art techniques like QLoRA and Proximal Policy Optimization, allowing me to plan a sophisticated reinforcement learning project despite not being an ML specialist.

With Claude’s help, I set up a Python environment using Hugging Face transformers. It was great. I asked questions, followed suggestions, and let the LLM guide me through unfamiliar libraries while maintaining just enough critical thinking to ask the right questions. We built:

- Model wrappers optimized for my M4 Max’s 128 GB RAM

- A dataset loader to efficiently manage the training data

- An evaluator to test and log model performance

Current Status: The Wait Game

After running a quick sniff test with five samples, the smaller model predictably performed worse than QwQ-32B. But how much worse? That’s what I’m waiting to find out as the full benchmark runs on my laptop (estimated 30 hours).

Depending on the gap between models, I’ll decide whether reinforcement learning is worth pursuing. If we’re already above 85% accuracy, maybe not—but if there’s significant room for improvement, I’ll continue the journey.

The Big Takeaway

The most striking part of this experiment is how accessible it’s been. I’ve made significant progress with only 6-7 hours of total investment. While I’m not an ML expert, I feel surprisingly confident in what I’m doing.

Five years ago, trying to train your own reinforcement learning model as a side project would have seemed absurd without specialized knowledge. Today, with the right LLM partners, you can tackle domains that were previously impenetrable.

I’m not sure where this side quest will lead, but I’m already convinced that the barriers to technical experimentation have fundamentally changed. These first steps into reinforcement learning would have been impossible for me without LLM assistance.

In future posts, I’ll share the benchmark results, training process, and what I learn about whether this vibe coding approach can produce a genuinely useful model. If you’ve been curious about a technical domain but thought “that’s not for me”—maybe it’s time to reconsider.