Raw Feedback

I had an early test user trying out my app, and he sent me three Loom videos. One of them showed a genuine bug—he was working through a list of tasks, hitting reject on one, accept on another, and somehow the whole thing kept resetting. Very frustrating. The other two videos? Just him expecting a capability we didn’t have.

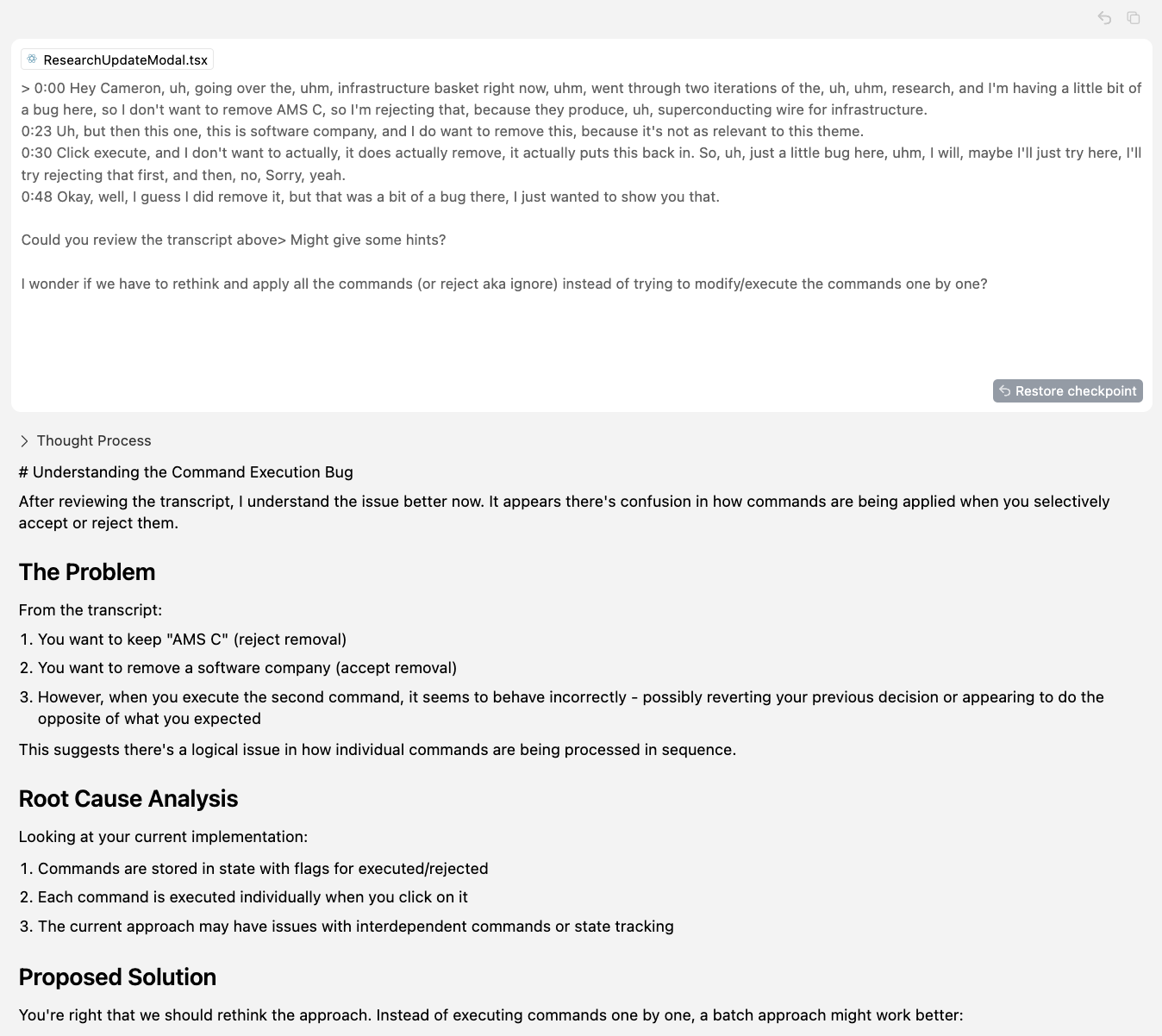

The bug itself was obvious. It wasn’t the expected behavior. But instead of jumping straight into debugging, I went to Cursor. I told it, “A user is having an issue with this form, here’s what he’s doing, can you investigate?” I had it in ask mode, not agent mode, because I didn’t want it to fix anything, just research. It came back with a possible explanation, but I wasn’t convinced.

Then I got an idea. I didn’t feel like writing a proper bug report, didn’t want to spell out the issue step by step. So I copied his Loom transcript—messy, vague, full of mumbling—and threw it into Cursor. And immediately, it just… got it. It didn’t know the root cause yet, but it understood the problem better than before.

From there, I started thinking: should this workflow change? Instead of applying changes immediately when the user reviews an item, should we queue them up and apply them all at once at the end? I tossed that into the AI, and it gave me an analysis of the current approach versus my idea. We refined it a bit, then I switched into agent mode and had it write up the plan. Now I’m working on it.
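To make the queue-it-up idea concrete, here is a minimal sketch in Python. It is not the app's actual code: `ReviewQueue`, `record`, `apply_all`, and the status strings are all names I made up for illustration. The point is only that decisions are collected while the user reviews, and nothing in the task list changes until the end.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from typing import Literal

# Hypothetical decision type; the real app may track more states.
Decision = Literal["accept", "reject"]


@dataclass
class ReviewQueue:
    """Collects review decisions and applies them in one batch."""

    pending: dict[str, Decision] = field(default_factory=dict)

    def record(self, item_id: str, decision: Decision) -> None:
        # Only remember the decision; the task list is not touched here,
        # so reviewing one item cannot reset the others.
        self.pending[item_id] = decision

    def apply_all(self, items: dict[str, str]) -> dict[str, str]:
        # Build a new status map so a failure leaves the input unchanged.
        updated = dict(items)
        for item_id, decision in self.pending.items():
            if item_id not in updated:
                raise KeyError(f"unknown item: {item_id}")
            updated[item_id] = "accepted" if decision == "accept" else "rejected"
        self.pending.clear()
        return updated


# Usage: the user rejects one task and accepts another, then finishes.
tasks = {"t1": "pending", "t2": "pending", "t3": "pending"}
queue = ReviewQueue()
queue.record("t1", "reject")
queue.record("t2", "accept")
tasks = queue.apply_all(tasks)
```

The trade-off in this sketch is that the user sees no changes until the batch is applied, which is part of what the comparison with the current approach has to weigh.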

What stuck with me wasn’t just that the AI helped debug. It was how naturally the user data flowed into it. I didn’t have to interpret the user’s feedback, structure it, or clean it up. He mumbled his way through a bug, and that was enough. It makes me wonder: what if user feedback could automatically trigger debugging sessions or even draft fixes?

Not saying we’d act on it blindly. But the fact that we even could? That’s interesting.